In Minecraft[1], a day/night cycle runs for 20 minutes of real time from dawn to dawn. That is 72 times faster than our normal cycle on Earth. In the version of Minecraft that I played in 2014, I do not recall any seasonal variation or weather patterns, but there were different biomes in which to experience winter, summer, and everything in between. In Stardew Valley, a player experiences all four seasons as part of the story, and each season lasts for 28 in-game days, with the clock ticking past 6 hours of real time per season.

During my free time[2], I frequent town/city/country/world-building strategy/survival games[3]. My most recent ones include Mindustry, Banished, Raft, and Kingdoms Reborn. I played Cities: Skylines in the past, and I was obsessed with Rise of Nations in high school. These games share similar charms: you are in control of your future (somewhat), you can build anything you want (kinda), and when you fail, you start again (the best). The restart is not punishing: no loss of real money, no rebirth, and only several hours of real time spent.

This is not an essay looking for ways to waste one’s personal life on countless micro-optimizations in a video game world because life in the real world is mundane yet unforgiving. This is an essay about a proposal to bootstrap resample history, our own collective history, over millions of iterations. For the past several years, I have kept thinking about the history of human civilizations. This idle-time musing took concrete shape through three unrelated encounters: (i) the day I learned the inner workings of a statistical concept called the confidence interval and hand-coded its implementation from scratch in Python[4], (ii) the third season of the Sword Art Online anime series, which discusses the concepts of soul and time[5], and (iii) a recent machine learning paper demonstrating the concept of Generative Agents[6].

In this essay, we will walk through this together as an exercise for our imagination.

Alicization

Most advanced technologies serve the military as their first customer. Artificial Labile Intelligent Cybernated Existence, or simply A.L.I.C.E., followed this military-first business blueprint. Alice was the titular character of the Sword Art Online: Alicization series, both as an artificial human in a fantasy world hosted on a one-of-its-kind supercomputer floating in the middle of the Pacific Ocean, and as the codename for a highly secretive project to create an advanced artificial intelligence. A.L.I.C.E. the computer algorithm was designed for military purposes, while Alice the tritagonist grew up inside a manmade fantasy world that simulated human history. These two had their fates entangled, but that is the least of our concerns here.

The artificial intelligences that we know and admire today are mostly top-down. They are built from a comprehensive collection of human knowledge, distilled and compacted into a form called “embeddings”[7] and fed into machines that then regurgitate it depending on the context. Without prior knowledge, top-down artificial intelligence does not work. It needs a foundation, a rather huge one. This top-down approach comes with limited adaptability; it struggles to fully process new information that it has not seen before[8].

Project Alicization was born out of the need to synthesize an adaptive, bottom-up artificial intelligence. Such an intelligence needs to learn, not from mountains of pre-allocated data, but from a series of interactions with its surroundings over time. This approach implies that the algorithm needs to run for years, and that is exactly how Rath, the organization behind Project Alicization, ran it. The experiment spanned roughly five human generations. It started with four real human agents who descended[9] into the manmade fantasy world, nurtured the initial human civilization with tools and trades, and then logged out after dying from a simulated epidemic. Their goal was to let the system run after a short initial period of bootstrapping and to observe it from behind a silver screen while sipping coffee.

The project worked. It thrived. Alice was born at the tail-end of the fifth (artificial) human generation, grew up alongside the protagonist and the deuteragonist, happily… but not ever after.

The artificial humans, residents of this simulated world, organized themselves into a society throughout the five-generation period in the absence of the initial founding human agents. There were schools, cathedrals, and large buildings, all with architecture resembling that of the medieval age.

On the human side, it took Rath a few days to see A.L.I.C.E. develop, or maybe A.L.I.C.E.s, since there were tens of thousands of artificial humans including Alice. The project succeeded in demonstrating the viability of the concept, but was ultimately halted[10] due to the plot. Something bad needed to happen to move the story forward, and I was left disappointed not to see what course this artificial civilization would have charted.

I wanted to know: had Rath let it run a few days longer, would anyone have dug persistently enough to find oil? Or truffles? If Rath had run it for more than a month, would it eventually have led to Nvidia vs. AMD all over again? Honestly, we need more competition in the GPU space.

Generative Agents

The Alicization series went on sale as a novel beginning in February 2012, and its anime adaptation came out in October 2018. It was unthinkable, at least in our lifetime, that technology could advance far enough to produce a bottom-up artificial intelligence that is adaptable and can self-organize, all without prior knowledge. Then came Generative Agents, perhaps the first ever proof of concept, on a small scale, that some form of A.L.I.C.E. could possibly be constructed.

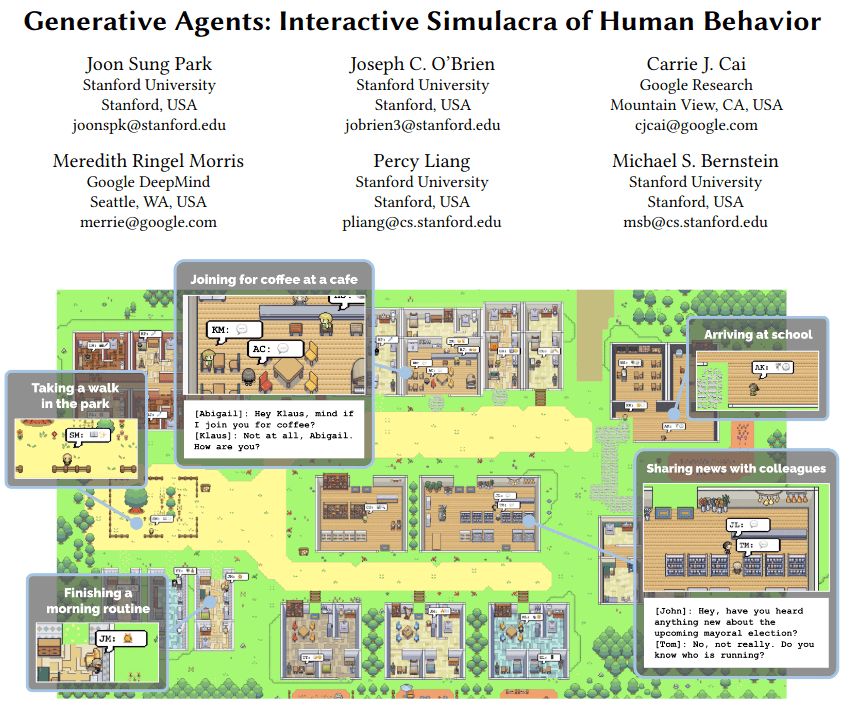

In April 2023, a study was submitted to the preprint repository arXiv, written by researchers at Stanford and Google.

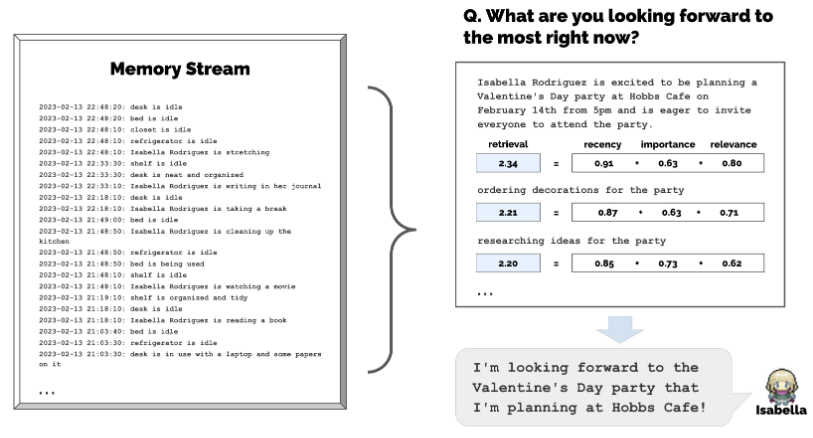

The study starts off by introducing the concept of a Generative Agent, that is, a computational software agent souped up by artificial intelligence that “simulates believable human behavior”.

Accompanying the study is the program itself, available on GitHub at joonspk-research/generative_agents.

Non-playable characters (NPCs) enrich the gaming experience, and the closer they are to being human-like, the more immersive the experience will be. Generative Agents adopted this idea and turned the dial to 11. But this was not the first time artificial intelligence was recruited for games. The study discusses a prior breakthrough, OpenAI Five for Dota 2, demoed at The International 2018. Generative Agents were of a different breed. OpenAI Five understood a tangible reward that its learning algorithms could optimize for, i.e., winning the game. Generative Agents did not live in a world where winning defines the endgame. In fact, there was no endgame to achieve here.

The small simulated world held 25 Agents, each customized with a natural-language prompt that detailed its personality and initialized its memories, and each set to interact with the other Agents daily. I found it unbelievable that the Agents could plan a party with relative ease: the admin simply told a designated Agent that s/he wanted to throw a party, and the party happened, with all the other Agents invited and deciding to show up. While simulating human-like behavior was the goal of this project, everybody deciding to show up is something that would never happen in real life.

The project garnered a positive reception.

It came out when OpenAI’s ChatGPT was all the rage, and in fact this small simulated world ran on gpt-3.5-turbo.

Fast forward to the end of 2024, and the gpt-3.5-turbo model sits low on the totem pole as stronger models keep showing up.

The next frontier of human-like behavior must therefore have at least 3 Agents arrive late and 2 Agents not show up to the party at all.

Resampling for 14 Million Times

Understanding confidence intervals can be a tricky thing. I decided to not let this one be yet-another-button-I-click-on-GraphPad-Prism, and it paid dividends after I half-mastered it. That fated day was when I bumped into a tutorial by James Brennan on calculating confidence intervals via a method called bootstrap resampling, and additionally a tutorial by Erik Bernhardsson on other facets of statistics more generally. Bootstrap resampling involves randomly drawing samples, with replacement, from the original dataset over and over and over and over again, and calculating the statistic of interest from each resample[11]. That encounter motivated me to hand-roll a small Python package that calculates confidence intervals for a given set of data.
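To make the procedure concrete, here is a minimal sketch of a percentile bootstrap in plain Python. It is not the package mentioned above; the function name `bootstrap_ci`, its parameters, and the twelve measurements are all made up for illustration.

```python
import random
import statistics


def bootstrap_ci(data, stat=statistics.mean, n_resamples=10_000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for `stat` of `data`.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    n = len(data)
    # Each resample draws n values *with replacement* from the original data,
    # then the statistic of interest is computed on that resample.
    estimates = sorted(stat(rng.choices(data, k=n)) for _ in range(n_resamples))
    # Trim (1 - level) / 2 of the estimates from each tail.
    tail = (1.0 - level) / 2.0
    lo_idx = int(tail * n_resamples)
    hi_idx = int((1.0 - tail) * n_resamples) - 1
    return estimates[lo_idx], estimates[hi_idx]


# Invented measurements (n = 12), not real data.
sample = [4.1, 5.3, 3.8, 6.0, 5.1, 4.7, 5.9, 4.4, 5.0, 6.2, 3.9, 5.5]
low, high = bootstrap_ci(sample)
print(f"mean = {statistics.mean(sample):.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

The percentile method is only one of several ways to turn bootstrap estimates into an interval; it is used here because it is the shortest to write down.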

The technical understanding I gained from learning about confidence intervals through bootstrap resampling gave me an appreciation of how not to misinterpret them. Amusingly, the phrase bootstrap resampling stuck with me for a little while. What if, assuming limitless computational power and storage capacity, I were to resample the entirety of human civilization from Sumer until 2016 (approx. 6,500 years[12] of human history) 14,000,605 times[13] over? Would it approximate a course of human civilization close to our current timeline? And if I existed in the other 14,000,604 resampled timelines, did I actually make that jump into the Bitcoin fad in 2010[14]?

At first I was unsure how to differentiate between reporting a standard deviation and reporting a confidence interval. I was enlightened by a simple fact: the standard deviation concerns the variation that exists within the sample dataset (the one we took the measurements for), whereas a confidence interval is an attempt to conclude what we would have found if we could sample the entire population. A 95% confidence interval does not mean there’s a 95% chance that the true value of a population can be found in that particular interval. Rather, if we repeated the sampling process many times and built an interval each time, about 95% of those intervals would contain the true value.
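The "repeat the sampling many times" reading can be checked with a small simulation. The sketch below assumes a made-up, normally distributed population with a known mean, and uses a simple normal-approximation interval rather than the bootstrap to keep it short; every constant in it is invented for illustration.

```python
import random
import statistics

rng = random.Random(42)
TRUE_MEAN, TRUE_SD = 10.0, 2.0  # hypothetical population, invented for illustration
N, TRIALS = 50, 2_000           # sample size and number of repeated experiments
z = statistics.NormalDist().inv_cdf(0.975)  # about 1.96 for a 95% interval

hits = 0
for _ in range(TRIALS):
    sample = [rng.gauss(TRUE_MEAN, TRUE_SD) for _ in range(N)]
    mean = statistics.fmean(sample)
    sd = statistics.stdev(sample)       # spread *within* this one sample
    half_width = z * sd / N ** 0.5      # uncertainty about the population mean
    if mean - half_width <= TRUE_MEAN <= mean + half_width:
        hits += 1

coverage = hits / TRIALS
print(f"{coverage:.1%} of {TRIALS} intervals contained the true mean")
```

Note how the standard deviation stays near the population spread no matter how large `N` is, while the interval's half-width shrinks as `N` grows. The fraction of intervals that capture the true mean should land close to 95%.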

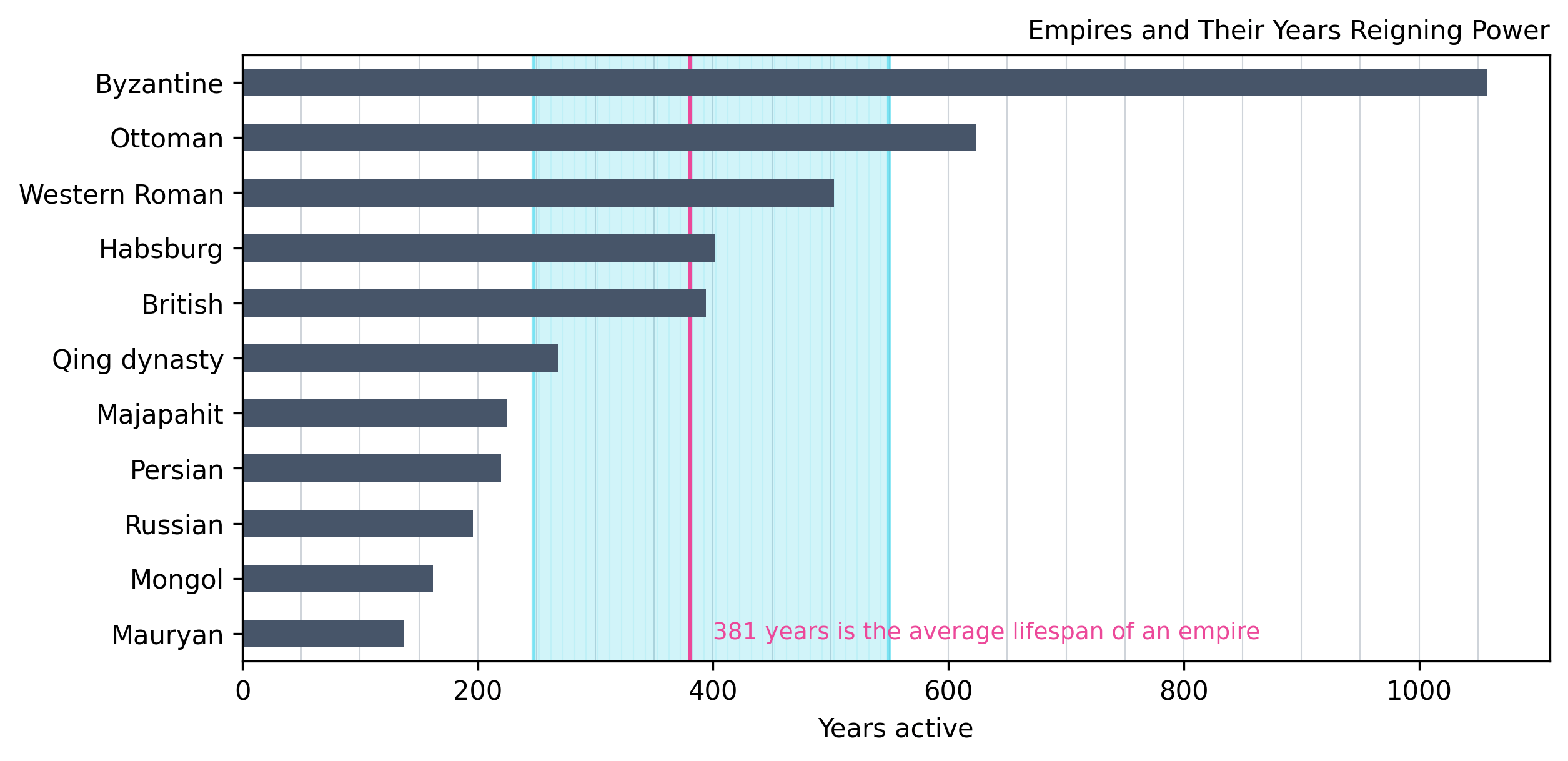

Prediction is an interesting business. It is easier to predict larger things than smaller things. An empire, perhaps one of the single biggest units of measurement, could be approximated to last an average of ~400 years, with a 95% confidence interval of 247 to 550 years, after resampling the measurements 14,000,605 times[15]. However, we still cannot predict what the weather will look like next month. The difference between 1 month and 400 years is roughly 400 years[16], and yet we struggle with the smaller one.

Rath ran A.L.I.C.E. for a single instance, and it cultivated a strictly law-abiding medieval human civilization with a somewhat corrupt pontifex and a corrupt senate at the helm. It was probably a few hundred years away from an industrial revolution. Had Rath not been infiltrated by a foreign adversary, the simulation might have concluded and perhaps been rerun several times over. In the interest of exercising our imagination, how confident are we in guessing that this simulated human civilization would mirror ours? Our collective history has been a linear series of events in which the branches not taken are lost.

The Average Solutions

The many iterations of Rise of Nations I played all ended with nuclear fallout (and my overwhelming victory). However, that is hardly surprising for Rise of Nations, because winning the game means annihilating the enemy. If I were to say that I resampled Rise of Nations for hundreds of iterations and they all ended the same way, with nuclear catastrophe as the predetermined outcome, that would indeed cast a somber shadow on the fate of humanity. Drawing this conclusion is a bridge too far, but the exercise of speculating on the average fate of civilizations across many iterations does approach a converging solution.

I wondered if our linear human history has been the most optimal, most average solution. This line of thought suggests two theories:

- If humanity and human civilizations can be parameterized into mathematical equations, one of those parameters would be self-destruction[17].

- Destruction is a reset rather than an endpoint; the destructions the Earth has seen have differed only in their causes.

Parameterization of human civilization is a fascinating topic, however intractable it is. Drawing on my experience in programming, computer programs have the benefit of running inside a closed system. External cues will not change the outcome of computations, at least not to the point of crashing the said programs[18]. Human civilizations exist in a very much open system where external factors exert an inordinate amount of influence. Coming up with a mathematical equation to describe a civilization is probably a futile exercise, let alone accounting for millions of individuals forming hierarchical societies in a civilization, with each individual having their own parameters (probably in the hundreds), and then tacking on the parameters defining the mechanics of weather and climate.

I thought it was intractable; then I looked into the state of large language models (LLMs), the technology powering AI chatbots. Their parameters are measured in billions, easily! Interacting with them (albeit primarily in text mode) can achieve a near human-like experience. Combine this with the aforementioned Generative Agents, scale the system up to hold hundreds to tens of thousands of Agents, simulate a world the way Rath did for A.L.I.C.E., and I think we now have the fundamental recipe to discover the parameters defining human civilizations and, in turn, to compile those parameters into models.

Re-Iterating Branches of the Pasts

I am convinced I am not the only one twiddling my thumbs and lamenting the paths of the past neither taken nor considered. One of the many paths I could have steered into but did not is a formal education in computer science, which I never had despite my undying interest in it. I approached it in the fall semester of 2014, only to chicken out for fear it would tank my grades. Fast forward to 2024, and computer science graduates struggle to land a job amid a highly competitive and tightening job market in the IT industry. It is an uncanny feeling to think about this.

I am not looking to go back to the past, nor to jump into an alternate timeline wishing for better outcomes that I could have secured. This whole essay is an exercise in imagination, and my interest in the other branches of reality is, as of today, purely academic. One part of me is dying to know what could have transpired had I taken an action (or not) on something critical in the past, and another part of me is dying to know what could have happened in the other branches of reality had history heeded all the possibilities at every turning point.

Last but not least, this essay was brought to you by: (a) my constant existential crisis, (b) an outburst of reading about bootstrap resampling several years ago, (c) the Sword Art Online series, (d) the large language model hype, and (e) the desire to understand human histories.

Footnotes

-

For the uninitiated, Minecraft is a popular video game that belongs to the world-building and survival genre, played from the first-person view. True to its name, it is about mining and crafting. Mostly. And I don’t know why I needed to write this footnote. ↩

-

Which I possess very little of, but thank god time moves faster in these kinds of video games. ↩

-

But not turn-based variants, no. Yes, Sid Meier’s Civs, I am looking at you. I tried Sid Meier’s Civilization V once and that did not last very long. ↩

-

Don’t bother hand-coding it if you don’t have to; the `seaborn` library can do that. But if you must, see my GitHub repo ataixnr/razor. The reason I learned it was that I needed to implement one for the LOESS/LOWESS smoothing algorithm. If I recall correctly, I attempted this back in 2021. ↩

-

This was during the Alicization arcs, and they were discussing a technology called Soul Translator. The premise of this arc actually serves as the foundation of my thoughts on this topic. ↩

-

Park et al. 2023, Generative Agents: Interactive Simulacra of Human Behavior, arXiv:2304.03442. See GitHub: joonspk-research/generative_agents. ↩

-

In the world of large language models, which power the likes of ChatGPT, Bard, etc., words are stored as vectors called embeddings. Words that are similar in context (e.g. apple and orange) may be placed closer together in this vector space. Altogether, embeddings allow ChatGPT to figure out how to string words and sentences coherently into expressions that make sense. ↩

-

Sure, if the field continues training on more data and fine-tuning models, the accuracy will go up. But, at the time of writing, some Large Language Models (LLMs) hallucinate when asked a seemingly difficult question. If you ask them about a specific piece of knowledge, ask for the sources, and track those sources down. ChatGPT has been caught hallucinating by fabricating fake sources. ↩

-

Or more accurately, per the anime, they full-dived into this fantasy world. Full-diving means linking one’s consciousness directly to a virtual reality. It would take another write-up to discuss the specifics of the technology, and also, I am no expert. If you want to look it up, the system for full-diving is called Soul Translator. It is a fascinating read. ↩

-

If you haven’t watched this season, sorry for the spoiler. Haha. But, the way it was halted was the drama! ↩

-

In short, the idea is if we have a small set of observations (say, n=12), we cannot really make a conclusion about the entire population from this small observation. So, we balloon it up. Artificially. Despite that, it is known to be robust. ↩

-

Or if you prefer to count in hours, that amounts to approximately 57,193,064 hours. The longest non-stop flight is from JFK to Singapore Changi Airport, which is airborne for about 18 hours. Within the 57,193,064 hours between Sumer and today, one could have flown between Singapore and JFK approximately 3,177,392 times (I seriously do not know why you would want to do this). If you insist, I recommend taking business class. Your back will thank you. If you are not a fan of flying and prefer to complete Final Fantasy 7 Remake (FF7R) within 57,193,064 hours (a reasonable hobby to kill time, perhaps less expensive), you could fit 476,609 playthroughs if you are a completionist. ↩

-

Dr. Stephen Strange peeked into the future 14,000,605 times during the war against Thanos in Marvel’s Avengers. He had the time stone. I did not. Even if I had the time stone, I probably would not tell you. ↩

-

I am not kidding. I was this close to (a) setting up a mining rig with my puny Nvidia GPU, and (b) purchasing my first Bitcoin when I was 16. ↩

-

In the interest of being transparent, I checked the numbers several times across several LLMs. And fun fact: my Python function cannot run bootstrap resampling for 14 million iterations within a reasonable amount of time, i.e., a few short minutes. I had to write that function in Rust using PyO3 and Maturin, after which it took close to a second to complete. ↩

-

I actually did the math… and if you predicted I was giggling when I wrote this sentence, congratulations because you were right. ↩

-

Oh boy do I not want to talk about this. Humanity has had a streak of self-owning in the interest of the questionable (?) idea of self-preservation. The nuclear arms race of the Cold War should come first to mind. In recent years alone, seeing the way countries across the globe voted for government officials has indeed raised many questions. However, this reality of ours suffers from information asymmetry. Chess is a game of perfect symmetry between the pieces and the observers, but the reality we live in today is a fog that may never go away. ↩

-

To draw a very, very contrived example: a person sneezing six feet away from your computer will not change “1 + 1 = 2” executed by your CPU. A person sneezing non-stop, on the other hand, has a non-zero probability to initiate an outbreak. ↩